This is not a battle over who has the best server AI; it’s about whether you need server AI at all

There has been much criticism (or at least pessimistic mulling) of Apple’s AI strategy in light of Google’s and Facebook’s advances, namely that Apple hasn’t properly invested in the infrastructure, know-how, and talent it needs to compete. Today at WWDC I saw a clue as to why that might not be the case.

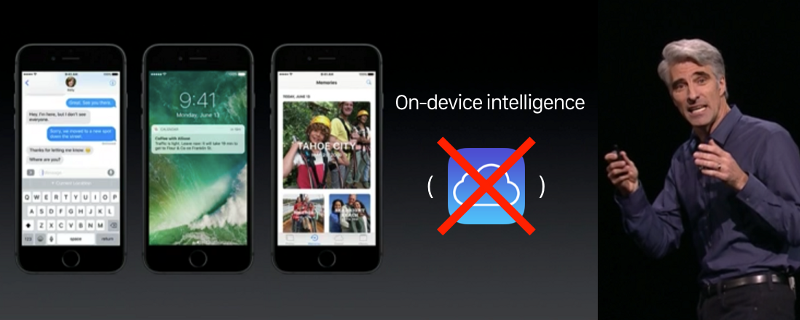

Rather than having a cloud-based solution that processes everyone’s info and sends it back to each device, Apple’s approach seems to be all about your data never leaving your device in the first place.

Do you need powerful centralized infrastructure to handle a billion simultaneous users if you have a billion baby pocket computers, each responsible for just one user?

If each iPhone is processing one user’s photos, texting habits, etc., you have a perfectly distributed, scalable solution that guarantees a 1-to-1 ratio of users to processing power. Add a user and their device, and you have added exactly the computing power you need to handle that new user. You cut out upload/download time and queuing, and you save on data charges. Best of all, a system like this is not reliant on Apple being good at cloud-based AI.
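To make the scaling argument concrete, here is a toy calculation. It is only a sketch, not a model of any real system: the function names and the numbers (one unit of compute per device, a fixed pool of server capacity) are illustrative assumptions.

```python
def compute_per_user_on_device(users: int, units_per_device: float = 1.0) -> float:
    """Every user brings a device, so total compute grows in step with users."""
    if users <= 0:
        raise ValueError("users must be positive")
    total_compute = users * units_per_device
    return total_compute / users


def compute_per_user_centralized(users: int, server_units: float) -> float:
    """A fixed server pool is shared, so each user's share shrinks as users grow."""
    if users <= 0:
        raise ValueError("users must be positive")
    return server_units / users
```

In this toy model the on-device share stays constant no matter how many users join, while the centralized share halves every time the user count doubles, unless someone buys more servers.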

What is Apple good at? Building powerful integrated software that runs locally on powerful integrated hardware.

As usual, Benedict Evans was (2 days) ahead of the rest of us:

I’m already theorizing about how it all works. After the local processing happens, do the “learnings” get aggregated in the cloud and improved over time, with updates periodically beamed back to the army of devices? Most importantly, will it work?

Who knows. But a distributed system of client-side AI is a starkly different approach than Google’s or Facebook’s, and it plays to Apple’s strengths perfectly.
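For the curious, the aggregate-and-beam-back loop I was theorizing about could look something like the sketch below. It uses plain parameter averaging, in the spirit of what the research literature calls federated averaging. To be clear, this is my guess at a possible shape, not anything Apple has described: `local_update`, `aggregate`, and the learning rate are all hypothetical names and values.

```python
from typing import List, Sequence


def local_update(weights: Sequence[float], gradient: Sequence[float],
                 lr: float = 0.1) -> List[float]:
    """One on-device training step: raw user data never leaves the phone,
    only the resulting weights would be shared (hypothetical design)."""
    return [w - lr * g for w, g in zip(weights, gradient)]


def aggregate(device_weights: Sequence[Sequence[float]]) -> List[float]:
    """Server-side step: average the weights reported by each device."""
    if not device_weights:
        raise ValueError("need at least one device")
    n = len(device_weights)
    # zip(*...) groups the i-th weight from every device together.
    return [sum(column) / n for column in zip(*device_weights)]
```

Each phone would run `local_update` on its own data, the server would call `aggregate` on the collected weights, and the averaged result would be pushed back out as the next starting point.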